Roboticists develop man-made mechanical devices that can move by themselves, whose motion must be modelled, planned, sensed, actuated and controlled, and whose motion behaviour can be influenced by “programming”. Robots are called “intelligent” if they succeed in moving in safe interaction with an unstructured environment, while autonomously achieving their specified tasks.

This definition implies that a device can only be called a “robot” if it contains a movable mechanism, influenced by sensing, planning, actuation and control components. It does not imply that a minimum number of these components must be implemented in software, or be changeable by the “consumer” who uses the device; for example, the motion behaviour can have been hard-wired into the device by the manufacturer.

So, the presented definition, as well as the rest of the material in this part of the WEBook, covers not just “pure” robotics or only “intelligent” robots, but rather the somewhat broader domain of robotics and automation. This includes “dumb” robots such as metal and woodworking machines, “intelligent” washing machines, dishwashers and pool cleaning robots. These examples all have sensing, planning and control, but often not in individually separated components. For example, the sensing and planning behaviour of the pool cleaning robot has been integrated into the mechanical design of the device, by the intelligence of the human developer.

Robotics is, to a very large extent, all about system integration: achieving a task with an actuated mechanical device, via an “intelligent” integration of components. Many of these components are shared with other domains, such as systems and control, computer science, character animation, machine design, computer vision, artificial intelligence, cognitive science and biomechanics. In addition, the boundaries of robotics cannot be clearly defined, since its “core” ideas, concepts and algorithms are being applied in an ever increasing number of “external” applications. Vice versa, core technology from other domains (vision, biology, cognitive science or biomechanics, for example) is becoming a crucial component in more and more modern robotic systems.

This part of the WEBook tries to define exactly what that core material of the robotics domain is, and to describe it in a consistent and motivated structure. Nevertheless, the chosen structure is only one of the many possible “views” that one can have on the robotics domain.

In the same vein, the above-mentioned “definition” of robotics is not meant to be definitive or final, and it is only used as a rough framework to structure the various chapters of the WEBook. (A later phase in the WEBook development will allow different “semantic views” on the WEBook material.)

Components of robotic systems

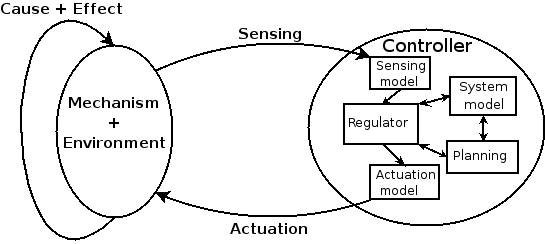

This figure depicts the components that are part of all robotic systems. The purpose of this Section is to describe the semantics of the terminology used to classify the chapters in the WEBook: “sensing”, “planning”, “modelling”, “control”, etc.

The real robot is some mechanical device (“mechanism”) that moves around in the environment, and, in doing so, physically interacts with this environment. This interaction involves the exchange of physical energy, in some form or another. Both the robot mechanism and the environment can be the “cause” of the physical interaction through “Actuation”, or experience the “effect” of the interaction, which can be measured through “Sensing”.

Robotics as an integrated system of control interacting with the physical world.

Sensing and actuation are the physical ports through which the “Controller” of the robot determines the interaction of its mechanical body with the physical world. As mentioned above, the controller can at one extreme consist of software only, while at the other extreme everything can be implemented in hardware.

Within the Controller component, several sub-activities are often identified:

Modelling. The input-output relationships of all control components can (but need not) be derived from information that is stored in a model. This model can have many forms: analytical formulas, empirical look-up tables, fuzzy rules, neural networks, etc.

The name “model” often gives rise to heated discussions among different research “schools”, and the WEBook is not interested in taking a stance in this debate: within the WEBook, “model” is to be understood with its minimal semantics: “any information that is used to determine or influence the input-output relationships of components in the Controller.”

The other components discussed below can all have models inside. A “System model” can be used to tie multiple components together, but it is clear that not all robots use a System model. The “Sensing model” and “Actuation model” contain the information with which to transform raw physical data into task-dependent information for the controller, and vice versa.

Planning. This is the activity that predicts the outcome of potential actions, and selects the “best” one. Almost by definition, planning can only be done on the basis of some sort of model.

Regulation. This component processes the outputs of the sensing and planning components, to generate an actuation setpoint. Again, this regulation activity may or may not rely on some sort of (system) model.

The term “control” is often used instead of “regulation”, but it is impossible to clearly identify the domains that use one term or the other. The meaning used in the WEBook will be clear from the context.
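
The sub-activities above can be sketched as one loop. The following is a minimal illustration, not the WEBook's own design: the 1D point robot, the ideal sensor, the planner that tries a few candidate velocities against a model, and the proportional regulator are all assumptions made for the example, as are every name and numeric value.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SystemModel:
    """Hypothetical model: a 1D point robot whose position integrates velocity."""
    dt: float = 0.1  # time step in seconds (assumed value)

    def predict(self, position: float, velocity: float) -> float:
        # Analytical formula; a look-up table or neural network could sit here instead.
        return position + velocity * self.dt


def sense(true_position: float) -> float:
    """Sensing: turn raw physical data into information for the controller.
    The sensor is assumed ideal (no noise, no scaling)."""
    return true_position


def plan(model: SystemModel, position: float, goal: float,
         candidates: Sequence[float] = (-1.0, -0.5, 0.0, 0.5, 1.0)) -> float:
    """Planning: predict the outcome of each candidate velocity with the model
    and return the predicted position that ends up closest to the goal."""
    best = min(candidates, key=lambda v: abs(goal - model.predict(position, v)))
    return model.predict(position, best)


def regulate(desired: float, measured: float,
             gain: float = 5.0, v_max: float = 1.0) -> float:
    """Regulation: combine planning and sensing outputs into an actuation
    setpoint (here a saturated proportional velocity command)."""
    command = gain * (desired - measured)
    return max(-v_max, min(v_max, command))


def run(goal: float, steps: int = 50) -> float:
    model = SystemModel()
    position = 0.0  # state of the simulated "real" robot
    for _ in range(steps):
        measured = sense(position)
        desired = plan(model, measured, goal)
        command = regulate(desired, measured)
        # Actuation: the physical robot is simulated with the same model.
        position = model.predict(position, command)
    return position


if __name__ == "__main__":
    print(run(goal=2.0))
```

In this sketch only the planner and the simulated actuation consult the model; the regulator works without one, which mirrors the remark that regulation may or may not rely on a model.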

Scales in robotic systems

The “components” description of a robotic system given above must be complemented by a “scale” description: the following system scales have a large influence on the specific content of the planning, sensing, modelling and control components, and hence also on the corresponding sections of the WEBook.

Mechanical scale. The physical volume of the robot determines to a large extent the limits of what can be done with it. Roughly speaking, a large-scale robot (such as an autonomous container crane or a space shuttle) has different capabilities and control problems than a macro robot (such as an industrial robot arm), a desktop robot (such as those “sumo” robots popular with hobbyists), or milli-, micro- or nano-robots.

Spatial scale. There are large differences between robots that act in 1D, 2D, 3D, or 6D (three positions and three orientations).

Time scale. There are large differences between robots that must react within hours, seconds, milliseconds, or microseconds.

Power density scale. A robot must be actuated in order to move, but actuators need space as well as energy, so the ratio between the two determines some capabilities of the robot.

System complexity scale. The complexity of a robot system increases with the number of interactions between independent sub-systems, and the control components must adapt to this complexity.

Computational complexity scale. Robot controllers are inevitably running on real-world computing hardware, so they are constrained by the available number of computations, the available communication bandwidth, and the available memory storage.

Obviously, these scale parameters never apply completely independently to the same system. For example, a system that must react at the microsecond time scale cannot be of macro mechanical scale, nor can it involve a high number of communication interactions with subsystems.
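
The coupling between the time scale and the computational complexity scale can be made concrete with a rough budget check. This is only a back-of-the-envelope sketch: the function name, its parameters and all numbers are invented for illustration, and real controllers have many more sources of delay.

```python
def fits_time_budget(ops_per_cycle: float, ops_per_second: float,
                     messages_per_cycle: int, latency_per_message_s: float,
                     deadline_s: float) -> bool:
    """Return True if one control cycle (computation plus communication
    with subsystems) fits within the required reaction time."""
    compute_time = ops_per_cycle / ops_per_second
    communication_time = messages_per_cycle * latency_per_message_s
    return compute_time + communication_time <= deadline_s


if __name__ == "__main__":
    # Assumed workload: 1e5 operations per cycle on hardware doing 1e9 ops/s,
    # plus 4 messages to subsystems at 0.1 ms latency each.
    workload = dict(ops_per_cycle=1e5, ops_per_second=1e9,
                    messages_per_cycle=4, latency_per_message_s=1e-4)
    print(fits_time_budget(deadline_s=1e-3, **workload))  # millisecond deadline
    print(fits_time_budget(deadline_s=1e-5, **workload))  # 10 microsecond deadline
```

With these assumed numbers the cycle takes about 0.5 ms, so it fits a millisecond deadline but not a microsecond-scale one, where the communication with subsystems alone exceeds the budget.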

Background sensitivity

Finally, no description of even scientific material is ever fully objective or context-free, in the sense that it is very difficult for contributors to the WEBook to “forget” their background when writing their contribution. In this respect, robotics has, roughly speaking, two faces: (i) the mathematical and engineering face, which is quite “standardized” in the sense that a large consensus exists about the tools and theories to use (“systems theory”), and (ii) the AI face, which is rather poorly standardized, not because of a lack of interest or research efforts, but because of the inherent complexity of “intelligent behaviour.” The terminology and systems-thinking of both backgrounds are significantly different, hence the WEBook will accommodate sections on the same material but written from various perspectives. This is not a “bug”, but a “feature”: having the different views in the context of the same WEBook can only lead to a better mutual understanding and respect.

Research in engineering robotics follows the bottom-up approach: existing and working systems are extended and made more versatile. Research in artificial intelligence robotics is top-down: assuming that a set of low-level primitives is available, it asks how they could be applied in order to increase the “intelligence” of a system. The border between both approaches shifts continuously, as more and more “intelligence” is cast into algorithmic, system-theoretic form. For example, the response of a robot to sensor input was considered “intelligent behaviour” in the late seventies and even early eighties. Hence, it belonged to A.I. Later it was shown that many sensor-based tasks such as surface following or visual tracking could be formulated as control problems with algorithmic solutions. From then on, they did not belong to A.I. any more.

The acclaimed Czech playwright Karel Capek (1890-1938) made the first use of the word ‘robot’, from the Czech word for forced labor or serf. Capek was reportedly a candidate for the Nobel prize several times for his works, and was very influential and prolific as a writer and playwright. The word ‘robotics’ was first used in Runaround, a short story published in 1942 by Isaac Asimov (born Jan. 2, 1920, died Apr. 6, 1992). I, Robot, a collection of several of his robot stories, was published in 1950.

In 1956, after the technology explosion during World War II, a historic meeting took place between George C. Devol, a successful inventor and entrepreneur, and the engineer Joseph F. Engelberger; over cocktails, the two discussed the writings of Isaac Asimov. The image of the "electronic brain" as the principal part of the robot was pervasive. Computer scientists were put in charge of robot departments of robot customers and of factories of robot makers. Many of these people knew little about machinery or manufacturing but assumed that they did.

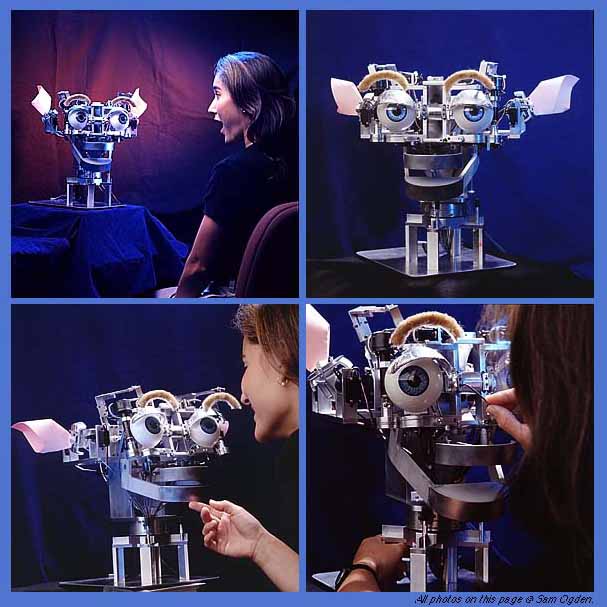

Kismet has a repertoire of responses driven by emotive and behavioral systems. The hope is that Kismet will be able to build upon these basic responses after it is switched on or "born", learn all about the world and become intelligent.

Machine vision involves devices which sense images and processes which interpret the images. Thomas Braunl of The University of Western Australia provides an excellent example of robotic vision research in both hardware and software.

Improv is a tool for basic real-time image processing at low resolution, suitable for mobile robots, for example. It has been developed for PCs running the Linux operating system. Improv works with a number of inexpensive low-resolution digital cameras (no framegrabber required), and is available from Joker Robotics. Improv displays the live camera image in the first window, while subsequent image operations can be applied to this image in five more windows. For each sub-window, a sequence of image processing routines may be specified.
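
The idea of attaching a sequence of image processing routines to each sub-window can be sketched in a few lines. This is not Improv's actual interface: the function names, the window labels and the tiny 2x2 "camera frame" are all hypothetical, chosen only to show how operations chain.

```python
from typing import Callable, Dict, List, Sequence

Image = List[List[int]]            # grayscale pixels in the range 0..255
ImageOp = Callable[[Image], Image]


def invert(img: Image) -> Image:
    """Replace each pixel by its negative."""
    return [[255 - p for p in row] for row in img]


def threshold(level: int) -> ImageOp:
    """Build an operation that maps pixels >= level to 255 and all others to 0."""
    def op(img: Image) -> Image:
        return [[255 if p >= level else 0 for p in row] for row in img]
    return op


def apply_pipeline(img: Image, ops: Sequence[ImageOp]) -> Image:
    """Apply the operations in order; the input frame is left unmodified."""
    for op in ops:
        img = op(img)
    return img


if __name__ == "__main__":
    frame: Image = [[10, 200], [128, 90]]  # stand-in for a live camera image
    # One hypothetical routine sequence per sub-window.
    windows: Dict[str, List[ImageOp]] = {
        "window 2": [invert],
        "window 3": [invert, threshold(128)],
    }
    for name, ops in windows.items():
        print(name, apply_pipeline(frame, ops))
```

Because every routine takes an image and returns a new one, any sequence of routines can be specified per window without the windows interfering with each other or with the live frame.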
